“I came here to chew bubble gum and demo the next LTS. And I’m all out of chewing gum.”

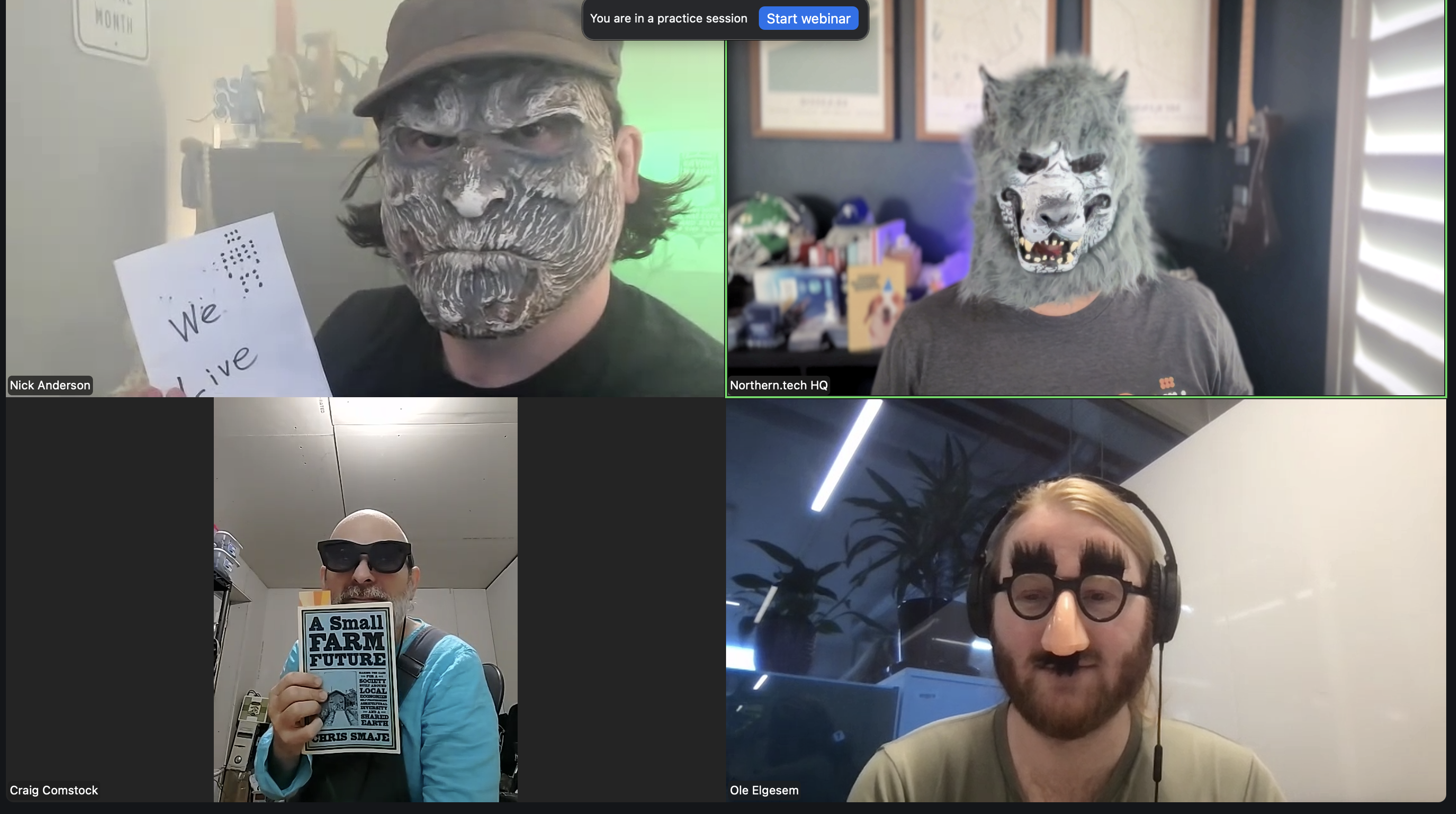

Herman joined Cody, Craig, and Nick to give a sneak peek of the upcoming CFEngine 3.27 LTS release and show off some of its new features, including:

- An AI agent to query your infrastructure using natural language.

- Audit log improvements.

- A new first-time setup experience for Mission Portal.

- An enhanced Mission Portal UI.

- New policy language functions like `getgroups()`, `getgroupinfo()`, `findlocalgroups()`, `getacls()`, `isconnectable()`, `isnewerthantime()`, and `classfilterdata()` (which we covered in The agent is in - Episode 51 - Data-driven configuration with classfilterdata()).

- A new `evaluation_order` control to run promises in a top-down order.

We also answer some great questions from the audience about custom promise modules, nested groups, and AI model integration.

Video

The video recording is available on YouTube:

At the end of every webinar, we stop the recording for a nice and relaxed, off-the-record chat with attendees. Join the next webinar so you don't miss this discussion.

Post show discussion

Custom promise types: Global vs. per-promise configuration?

A question arose about custom promise types: can their `interpreter` and `path` settings be configured globally rather than in every promise? Nick and Herman clarified that the convention is to declare custom promise types in `init.cf`. Once declared there, the types are known globally, and individual promises do not need to repeat that configuration.
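The convention might look like the following minimal sketch: a top-level `promise` block declares the type once, and bundles then use it by name. The `git` type name, the interpreter and module path, and the `repository` attribute are assumptions for illustration (a promise type's attributes are defined by whichever module you install), so treat this as a sketch rather than a drop-in file.

```cf3
# Hypothetical excerpt from init.cf: declare the custom promise type once.
# The interpreter and module path are assumptions; adjust them for your module.
promise agent git
{
  interpreter => "/usr/bin/python3";
  path => "$(sys.workdir)/modules/promises/git.py";
}

# Any bundle can now use the git promise type without repeating
# interpreter or path. The repository attribute is defined by the
# module, not by CFEngine itself (assumed here for illustration).
bundle agent clone_masterfiles
{
  git:
    "/opt/cfengine/masterfiles"
      repository => "https://github.com/cfengine/masterfiles";
}
```

The bundle itself still has to be activated like any other bundle; only the promise type declaration is shared.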

AI in restrictive environments: On-premise models?

A user inquired about using the AI agent with in-house, on-premise AI models due to security requirements, rather than public services like Google Gemini or Copilot. Herman confirmed that CFEngine currently supports two options for local/on-premise models:

- OpenAI Like: This option allows connecting to any model that speaks the OpenAI API.

- Ollama: Direct support for Ollama-compatible models.

He encouraged users to provide feedback if their specific in-house models don’t fit these categories.

Links

- Connect w/ Cody, Craig, Herman, or Nick

- All Episodes

- New Function Documentation